Disinformation, privacy violations, information overload, cyber bullying, financial malpractice… when it comes to modern technologies and the modern technology industry, professionals have a lot to answer for. It is a truism to state that technology has changed the world around us, and the impacts are not as utopian as the idealist thinkers of 30 years ago would have had us believe.

More technology leaders are waking up to the profound effects that their products can have on society. Although many of these effects are positive and should be welcomed, there are some practices which violate basic human rights, and perhaps have consequences that are inherently harmful for societies that value democracy and autonomy.

Technology ethics is becoming a market necessity. As well as generating a profit, technology companies want to make sure the impact that they have on the rest of society is a net positive.

The question is, what does this actually mean? And what are the practical steps that can be taken to become ‘ethical’? In this blog post I’d like to explain some of my thinking around this topic and give you some practical next steps to embed ethics in your organisation.

What does ‘ethics’ mean?

In an excellent introduction to ethics, ‘Being Good’, Simon Blackburn writes: “the ethical environment… is the surrounding climate of ideas about how to live.” I like this definition because it encompasses many meanings of ethics. It shows that ethics is a study of what it means to live in a community, as well as live with yourself.

This definition also shows that ethics is somewhat dependent on the culture and community that you are in. This could be temporal, geographical or social. What would have been ethical 200 years ago might not be ethical today. We live very differently in the UK compared to other European and international countries. Even within the same city, university or region, there are different schools of thought on how to live.

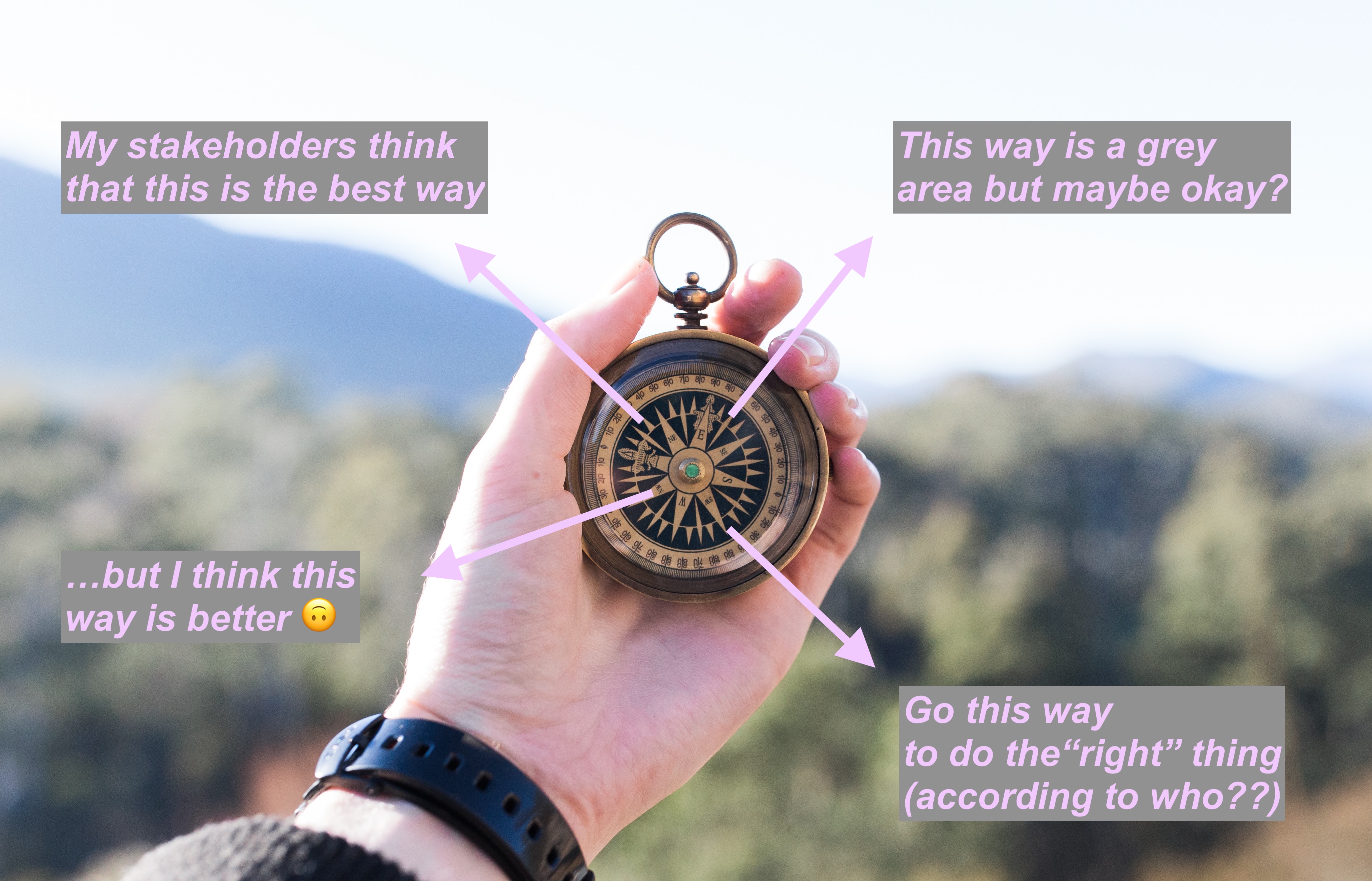

However, although there are cultural differences, it is important not to dismiss ethical problems. It is never the case that ‘anything goes’. The fact that it will be very hard to please everyone globally with your chosen ethics does not mean that you should not try. You should still work hard to ensure that your product can wholly benefit the community of people who will use it, and the wider community who may be affected by it.

Most importantly, if someone states that something is or is not ethical, then you’ve always got to ask: ‘according to which ethical system?’ Is it according to a holy text, the Human Rights Act, or a set of community principles?

What could an ethical code look like?

Many professions have documented ethical codes. Perhaps the most notable example is medical ethics. Other documented ethical codes come from religious texts, or perhaps legal texts like the human rights conventions. Some ethical codes are undocumented, and just exist as cultural norms within a community. These communities could be small, like a family, or large, like a nation or an international group.

Google AI: without an ethics board. In April of 2019, Google dissolved its external AI ethics board after just one week. Should the board be this easy to dissolve, and can we trust Google’s pledge to, for instance, not help with the development of AI weaponry?

One of the technological fields that has had the most attention when it comes to ethics is artificial intelligence. At the time of writing, at least 84 frameworks have been published outlining an ethical code for AI. This is a positive development. However, it is not enough to embed an ethical culture. Brent Mittelstadt states this is for the following reasons*:

- Technology professionals do not align their goals with the public interest. Instead, they are given motivations which align with company goals. Research from Data & Society states that these motivations are often driven by profit. This means that speed is prioritised above all else, in a culture where many believe that there are distinct problems with distinct solutions, and where professionals believe that because they are fantastic engineers, they must also be fantastic ethicists. What this creates is an unjustified belief in meritocracy.**

- Software engineering is a relatively new profession. Consequently, there is little precedent as to what makes a ‘good’ or ‘bad’ practitioner. Compare this with medical ethics where there are thousands of years of precedent.

- There are few proven methods that translate principles into practice. You may value ‘freedom’ or ‘privacy’, but have little idea of the actions which will support these abstract ideals.

- There are few sanctions for developers if they do not adhere to these vague requirements.

So yes, do document your principles and ethics. But you must recognise that this is only the first step. When I teach individuals and companies ethics by design, I try to ensure that ethics becomes part of the company’s process and practice. It has to be something that the whole company takes part in. I recommend beginning with an audit to ascertain the ethical culture that the company is in. This research includes:

- Sourcing the international and national laws with which the company must be compliant.

- Centralising internal documents which outline the company mission and values.

- Discovering and discussing the ethics that all employees try to embed into their working practice.

- Studying the ethics of the end-user. This refers to the ethics of your customers, the users your customers are serving, and the wider community who might be impacted by your product.

- Reviewing philosophers’ and academics’ views on ethics related to that technology.

Although the end product may be a documented code, you are looking to achieve the following:

- An engineering environment where ethics are prioritised just as much as commercial considerations in the product design and management process.

- A working environment where all employees are empowered with the language of ethics so they have greater resource to make decisions with an ethical focus.

- Transparent communication practices. This means that a company’s ‘walk’ reflects the company’s ‘talk’. This applies to both internal and external communications and should also involve an easy way for people inside and outside the company to give their feedback to decision makers.

- A consistent ethic. If the company decides upon a certain set of values and relevant applications of these values, these must be consistently applied across the company.

Ethics is a practice and not a destination. What’s more, this is the beginning of a new applied discipline. We cannot be sure what works and does not work yet. The most important thing is that we collaborate and try to make technology products that work for all members of society.

What can you do now?

It’s always good to begin by reading and researching. My recommended reading list is below. Please use these as a launchpad to find other academics and professionals working in this area.

Introduction to ethics

- Being Good by Simon Blackburn

- Ethics: the Fundamentals by Julia Driver

Key texts on debates around ethical technology

- Weapons of Math Destruction by Cathy O’Neil

- Stand Out of Our Light by James Williams

- The Age of Surveillance Capitalism by Shoshana Zuboff

- Re-Engineering Humanity by Brett Frischmann and Evan Selinger

- Code: Version 2.0 by Lawrence Lessig

*These are paraphrased from ‘Principles alone cannot guarantee ethical AI’. Although this paper was written about AI in particular, I think these four takeaways can be applied to many technologies and technology professions.

**This paper is called “Owning Ethics: Corporate Logics, Silicon Valley, and the Institutionalization of Ethics”.

Alice Thwaite is the founder of Hattusia, a technology ethics consultancy, and the Echo Chamber Club, a philosophical research institute dedicated to understanding how information environments can be healthy and democratic in a digital age. She teaches an ethics by design evening workshop at Experience Haus and General Assembly in London. Her Twitter handle is @alicelthwaite and her email is alice@echochamber.club.

Many thanks to Georgia Iacovou for her input and edits.

Special thanks to Andrew Strait.