Don’t blame technology for all the world’s problems — we’re just humans full of #emotions. It’s our fault.

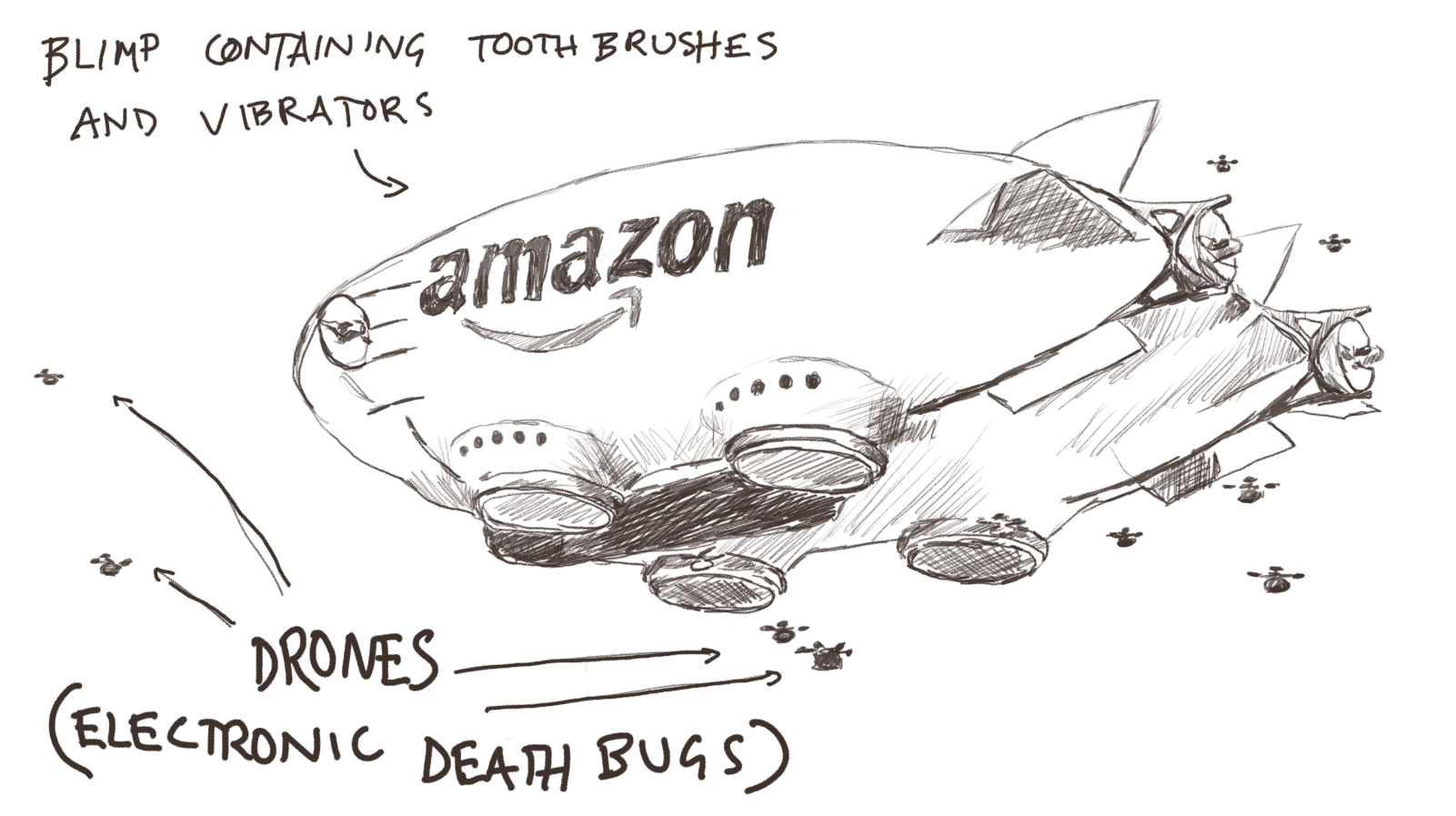

A few weeks ago, this blimp happened.

And everyone was scared of it, even though it poses absolutely no threat whatsoever. All I can see is a fairly efficient way of delivering Amazon packages without polluting the earth even more. All anyone else could see was the beginning of the end of the world. Why? Because, it seems, there is a strange unreasonable fear of ‘technology getting out of control’. No. Humans are getting out of control.

The video of the Amazon blimp churning out delivery drones is not real. It’s just a really good fake of a very believable landscape. One of the reasons it inspired so much fear is that people really can see this happening. Again, there’s nothing scary about a blimp that helps deliver packages, just FYI.

It’s sad that we humans, the very controllers of technology, fear technology. It’s hard to pinpoint exactly why this is, but I think it’s something to do with our emotions. This very short quiz that I already filled in for you should provide an adequate reality check:

- Humans are governed by:

❌ Lizard people

❌ Governments…? (no wait, these are human too)

✅ Emotions

- Technology is governed by:

❌ Uh… god?

❌ Bezos and Zuckerberg (so close, because they are…)

✅ HUMANS

If you don’t understand, just look again at the tweet I linked above; all the responses to this fake blimp were entirely based on emotion. This article called the fake blimp ‘terrifying as hell’ and then explained that Amazon were, perhaps, at some point, planning on maybe turning this into a real thing. What would the real thing do? Oh yeah… deliver packages. I’m shaking.

Before we dive in, consider any time you feel like you’ve been ‘manipulated’ by technology. That is, technology controlling you instead of you controlling it. That technology was still developed by humans. Humans are experts at manipulating other humans. It’s just that doing it with technology somehow feels wrong, and this is where our emotions come in.

Example: Advertisements have always manipulated us in one way or another, and at one point those were just static images in magazines. Now we have all kinds of technology which makes adverts happen in a really fast and efficient way. Adverts still exist to achieve the same thing as they always have — they’re just ‘better’. And that makes us feel worse. Like Betty Draper in this recent article about living in a panopticon.

Drones are scary aren’t they? Ban drones, I think.

Now, I think having emotions is a good thing. But what a lot of people forget to do is admit that they have them, and then they jump to silly conclusions which turn into shit headlines or shoddy regulations or widespread panic.

So look at it this way: drones are indeed scary — when they’re killing people. Humans are also scary when they kill people… aren’t they? But humans can be really nice when they help people. Drones can also be used to help people. And who, ultimately, controls drones? Yes, it’s humans. Humans control drones. Humans decide what the drones do. Humans decide if drones should kill people or help people.

Drones are most definitely the best example to illustrate my point because they are not a new piece of tech — they are simply being used in new ways. Those ways are good and bad and confusing. Here’s a handful of uses:

💣 To kill people efficiently in a war, while the pilot stays on the ground in a remote location. People argue this is great for the pilot but umm please don’t forget the people on the other side who are actually being killed? Turkey seem to be weirdly proud of their shiny new set of killer drones, one of which has been signed by Erdogan himself.

💊 To deliver medical supplies to places that need it, and are otherwise hard to get to. Companies such as Zipline have been working in Rwanda to get medicine and blood and other doctor-items over remote mountains and rivers. This requires no infrastructure because they are drones and they just fly in, drop off, and leave. Almost as if that’s what they’re designed for…

🍣 Delivering rather small amounts of gourmet food quite short distances in Finland, only. Which isn’t that groundbreaking or that useful but it’s still a thing that drones can do, yes.

🚨 For enhanced surveillance and security, which is DroneSense’s intended use. Their website explains that using their technology makes it easier to save lives, which I’m sure it does. There’s obviously comfort in knowing that there are hip new ways of catching baddies before they ever come anywhere near you, but giving people access to such sophisticated levels of surveillance really blurs the line between protecting people and just plain spying on them.

🐘 To catch poachers in the wild, when they are still miles away from the animals. These drones essentially fly around and employ the use of image recognition, which is how they can tell that someone is a poacher and not an elephant. Catching poachers is a good thing, of course, but if you check out Neurela, who make these drones, you can see how sophisticated their AI is, and how it can be used to ‘pick out faces in a crowd’ which is a sentence I really do not like.

✈️ Finally, if you can call this a use, you can use drones to confuse the shit out of everyone by setting them loose at Gatwick airport and bringing flights to a standstill. Not useful, but certainly interesting and hilarious.

As you can see this is quite a mixed bag. There are so many cases where new drone technology is being used for nice, cuddly things. But then, there are so many cases where those same technologies are being abused for mean and deathly reasons. Abused by who? I’ll say it again: humans. Fuck those guys (sorry, I’m getting emotional because I am a human too, unfortunately).

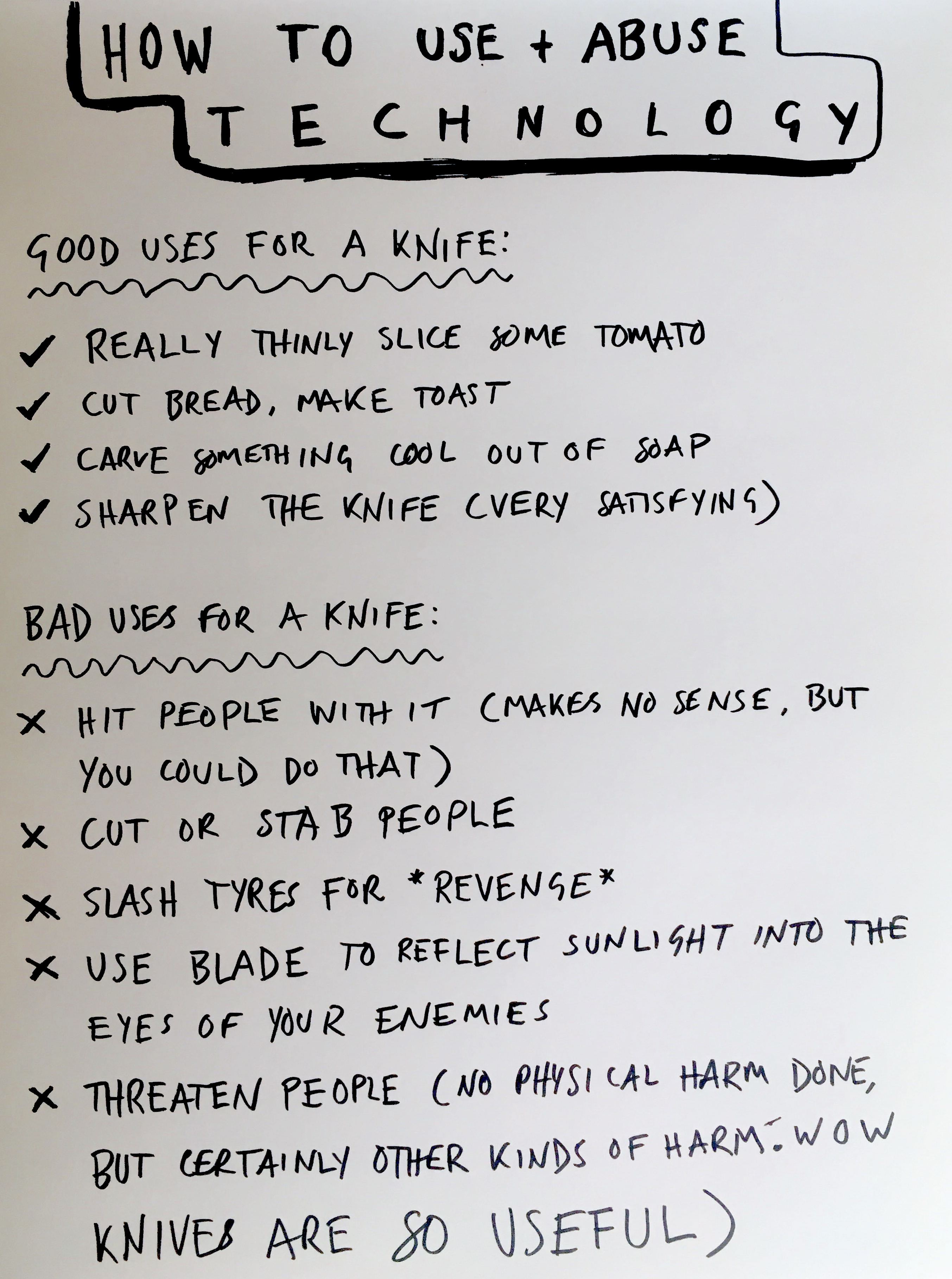

Gonna have to do a hard pass on banning drones, they’re too useful. Banning improper use of drones? Sounds difficult. No idea how we achieve that because I have not put years of research into why people commit crimes or do bad things. We’ve definitely banned stabbing each other with knives, but that hasn’t fully stopped people from stabbing each other with knives. Do you see?

Technology = power. Power = thing that can be abused

Okay: Facebook (exhales… been holding that word in for a while). They could choose to NOT use all that data they have on us for gross intrusions on our personal lives, couldn’t they? But they did choose that, so I guess that’s them.

When starting out, before he’d amassed terrifying levels of power, Mark Zuckerberg may never have had any bad intentions. But who cares because that is impossible to know, and good intentions are meaningless when they result in this many damaging mistakes.

The other issue with Facebook is that unpacking how it and Mark Zuckerberg have abused power and technology would be like trying to understand a Trump tweet. It’s just not worth it right now. Instead, I want to look at something that’s starting to get used more and more, and uses different kinds of technology to attribute different kinds of power to multiple parties. And that thing is: smart homes.

A ‘smart home’ is where you finally submit to technology like the product of the digital age that you are, and control your washing machine and fridge temperature and windows via, what I assume to be, a poorly designed app.

I don’t mean to shit all over smart homes but once again, as a human, my emotions are getting the better of me and I — perhaps irrationally — consider smart homes to be one of the first steps towards our dystopian technocratic future (but I’m probably wrong; I’m not that clever). Smart homes, right now, are obviously really convenient and cool if you need help around the house or are just really into that sort of thing.

Now, a common element of smart homes is a special doorbell that is hooked up to a camera which you can access via an app. With this, you only need to look at your phone to see who’s at the door. If you have the kind of camera doorbell that has facial recognition, you can get it to remember good faces and bad faces. That means it will learn who your neighbours and delivery people are, and therefore be able to pick out strangers.

Training machines to trust and/or suspect humans is a pretty odd thing to do; trust is messy and difficult and often based on — once again — emotions. Humans have not yet even come close to mastering trust, and yet humans are the ones teaching machines how to trust. We all rationally understand (I hope) that every single stranger at your door is not there to attack you or steal your possessions. Equally, it’s surely possible that any one of your neighbours could steal from you or hurt you.

The face of a trustworthy neighbour

So, your smart home security system is a good idea, but it’s quite possibly poorly executed. Putting aside the fact that it may not even be an effective security tool, there is also a lot of scope to mess with this technology and use it to do bad. Here are some cases of misuse by various parties:

The people who make the technology misuse it in a number of ways. One way is the actual, point-blank access they have to security footage. Employees of Ring (owned by Amazon), for some reason can watch what goes on in your house, if they want. Well… that’s just GREAT.

Further to this, these companies don’t need to watch actual footage of you masturbating in order to invade your privacy. Just look at Hive’s privacy policy. Seeing as their products connect to an app, that gives them straight access to your device(s), which is the same as handing them an encyclopaedia of your life.

“Details about the type of device (which can include unique device identifying numbers), its operating system, the browser you use and applications on the device that connect to our products and services. It can also include details of your internet service provider, mobile network and your IP address.”

That’s a lot of stuff that can very easily be used to narrow down who you are and what you like doing. This helps Hive and other companies chase you around the internet with even more advertising.

Another misuse case on the part of the makers of the technology works in tandem with those who use the technology. Ring have a social network side to their app, creating a sort of digitised neighbourhood watch. Ring users can share images of who they deem ‘suspicious persons’, and these get put onto an official database that Amazon is building. Then, whenever someone turns up at a Ring doorstep, the camera uses facial recognition and sees if that person matches anyone on the database. There are a bunch of problems here…

- Creating an online community within apps like Ring which employ facial recognition inevitably galvanises collective paranoia within the IRL community, and has a real ‘can I speak to your manager’ energy about it.

- On an individual level it could make it hard to trust anyone who comes to your door; surely being able to watch your own doorstep 24/7 only compounds paranoia?

- Amazon’s facial recognition software is known by AI experts to be rubbish; it’s biased against women and people of colour. At some point, it will misidentify people at your door as a ‘suspicious person’.

- Delivery people probably don’t want to be recorded on the job and then, possibly, profiled on a database of suspicious people? Maybe?

Finally, sitting solely on the users of the technology, is the inappropriate and dangerous surveillance of the people in your family/people whom you live with. If you’re a victim of domestic abuse, your abuser can keep track of you very easily if there are cameras set up in your home which they can control remotely via their phone. People assume that having cameras set up like this could help victims, but the only kind of domestic abuse that shows up on camera is physical violence and that is by no means where domestic abuse starts or ends.

I think that in the case of smart home surveillance, attributing perceived control and power to the individual (the user) has its problems, and these are not easily policed. It’s not as black and white as the wrong and right way to use a bread knife (the right way is to slice bread, the wrong way is to use it to hurt people).

However, I think more damage can be done by those who make this technology, because there seems to be nothing stopping them from turning on god mode, just like the Ring employees who can access your surveillance cameras and watch you dance naked to ABBA on repeat.

Think about it: an employee is just another human, and as we’ve covered, humans do bad things, especially when shrouded in anonymity. Snapchat employees have accessed data that they should not be able to get to so easily, and Lyft employees have abused their position to stalk celebrities and ex-partners.

“Behind the products we use everyday there are people with access to highly sensitive customer data, who need it to perform essential work on the service. But, without proper protections in place, those same people may abuse it to spy on users’ private information or profiles.” Joseph Cox, Vice

Blurring the lines between who has the power and control is where the danger lies. We can’t trust humans to do the right thing, so let’s use machines instead. But ultimately, humans design, control, and teach the machines. So… what then?

I’m not just intelligent, I’m artificially intelligent 😉

Humans teaching machines sounds a lot like machine learning, which is a very prominent part of AI at the moment. We’ve already talked about AI here, but only on the facial recognition side of things. The fear of AI is much like the fear of drones; both are extremely sophisticated, getting better all the time, and are used in many ways that we do not fully understand.

The difference is, AI is kind of everywhere. Who remembers life before Citymapper? No, don’t think about it. What a terrible time. Anyway, Citymapper uses AI to work, of course. Have you ever received an email? AI was involved, because it can detect what is and isn’t spam. Have you ever met a DLR driver? That was a trick question, those don’t exist. The DLR is a driverless train. Although I don’t think this counts as AI, just really sophisticated automation.

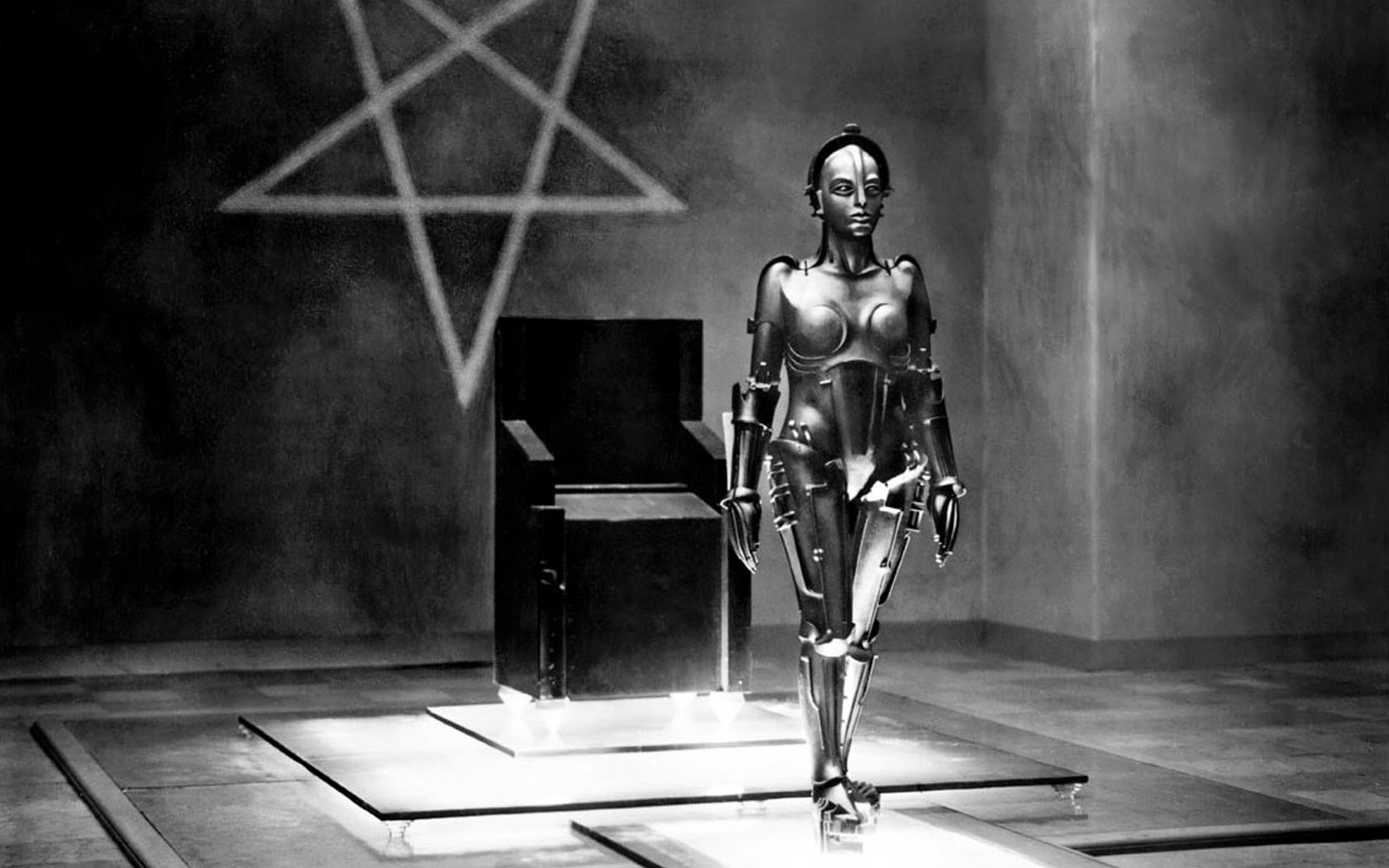

Automation is an important word here, because AI enables a lot of it. The fear of automation, machines, and AI is not new. This is obvious from how long it’s been present in popular culture: the first depiction of a robot (and essentially AI) in film was in Metropolis, and that came out nearly a hundred years ago.

The False Maria in Metropolis

Human fear of AI and automation can be boiled down to these things:

- Robots/machines/whatever start taking our jobs, we do a human VS machine uprising like in I, Robot

- Artificial Intelligence not sophisticated enough to do its job, gets things wrong, ruins lives

- Robots get too smart somehow and try to kill us (like the False Maria from Metropolis, like HAL from 2001: A Space Odyssey, like fucking everything in The Matrix)

To address these points, I present you with driverless vehicles; an efficient and environmentally friendly way of moving your objects and humans around with ~ the power of AI ~. So okay, if you replace all cabs with driverless ones, all the cab drivers will lose their jobs. But the jobs that open up because of this are things like customer service, logistics, vehicle maintenance, and so on. So it’s very possible that AI and automation used in this way would create more jobs.

Driverless cars are a tough one. For one thing, driverless cars are just not ready yet. They’ve only just started being used in a really cautious, small-scale way. The Einride ones are level 4 smart but are travelling very short distances and aren’t even breaching 5mph. It’s possible that the technology is not ready yet. It is also possible that we are not ready yet. As point two stipulates, it’s very hard to ‘trust’ that a driverless vehicle, or anything using AI will do the right thing.

Our trust in machines is completely inconsistent because, as I may have mentioned, we are humans with emotions. Humans are terrible drivers and are constantly crashing into each other. Yet we do not trust driverless cars, which are specifically designed to not ever crash into anything. BUT going back to Amazon’s Ring doorbells, we are happy to put our trust into an algorithm which has been proven to be biased. Stupid emotions.

What we’re getting here is not just an abuse of tech, but an abuse of tech that is not even good. Unfortunately, some jurisdictions in the USA are totally cool with using shoddy algorithms to predict criminal behaviour, and decide whether or not an individual should go to prison. It seems as though the prevailing attitude here is that ‘machines are always right’. Which is silly; we’re attributing the same amount of trust to algorithms like this as we would to, I dunno, a calculator.

The humans are the ones who feed the machines with data and teach them right and wrong, and ultimately it is humans who suffer the consequences (machines don’t suffer — they’re machines). In other words, we’re doing it to ourselves. Our grossly irresponsible mishandling of technology is what is taking us to these dark places. The technology existing is not the problem. It’s what you do with it.

François Chollet really hit the nail on the head in his article about his concerns with AI:

“I’d like to raise awareness about what really worries me when it comes to AI: the highly effective, highly scalable manipulation of human behavior that AI enables, and its malicious use by corporations and governments.” François Chollet

One particularly scary use of AI (which was touched on when I was talking about drones) is using it in the military. As we’ve learned, humans often make bad decisions so I do not think there is anything stopping the military from using AI to make it quicker and easier to kill people. So, what do we do when the AI makes a mistake and kills the wrong person? Who is to blame if ‘machines are always right’?

Now, I’m aware that I have not yet addressed point three, which is the fear that AI will get ‘too smart’ and try to kill or enslave us or whatever. That is because, when I asked one of our devs at Company what he thought about the possibility of a machine uprising, the response I got was this:

Yes, readers, this is how I ‘do research’

I expect that my question was not taken seriously because it seems so completely ridiculous. That could just be because we are very far from it being possible. But, it’s not impossible. If machines start to learn in the same way we do, and even start to reason, they could end up being like humans without emotions. Eek. Don’t think about it too much.

Sometimes, we’re just too dumb to even know what we’re doing.

Using unsophisticated algorithms to put people in jail is a dumb thing to do: because it’s dumb to rely on bad AI to make such tough and important decisions, and it’s dumb to use technology that you — a judge or lawmaker — do not fully understand.

One of the darkest and messiest sides to consistent abuse of tech by humans is humans who simply do not know what they’re doing, yet hold a lot of power. There’s a reason why Mark Zuckerberg was so able to make Congress look so stupid when they were questioning him during the Cambridge Analytica scandal; they’re out of touch, and they don’t know how the internet works. And they should know.

I’d say that the majority of consumers have likely not yet grasped the nuances of how aggressive online tracking technologies really work. But I think the average consumer has a better idea than most politicians. This is wrong; the imbalance of knowledge should not be this stark, and those with the least knowledge should not be the ones in charge of entire nations.

And, just to ensure that knowledge gap is as wide as possible, governments (in the West at least) pay private companies to provide anything that sits outside of their technological remit (which is nearly everything; governments are shit at technology). This is an expensive and short-sighted way of doing things; I do not trust that governments even know what they are paying for.

What comes out of this is a paralysing inability to deal with actual problems. In 2016 there was an increase in contraband coming into prisons via drones in the UK. Liz Truss, who was the Justice Secretary at the time, put forward barking dogs as a serious solution to this 🤦🏻‍♀️. No, Liz. Neither you nor any of your aides know what they’re doing. Just sit down. It’s nonsense like this that reassures all of us that politicians are bumbling fools who’ve only just worked their way up from VHS and need to hire assistants to print out their emails for them.

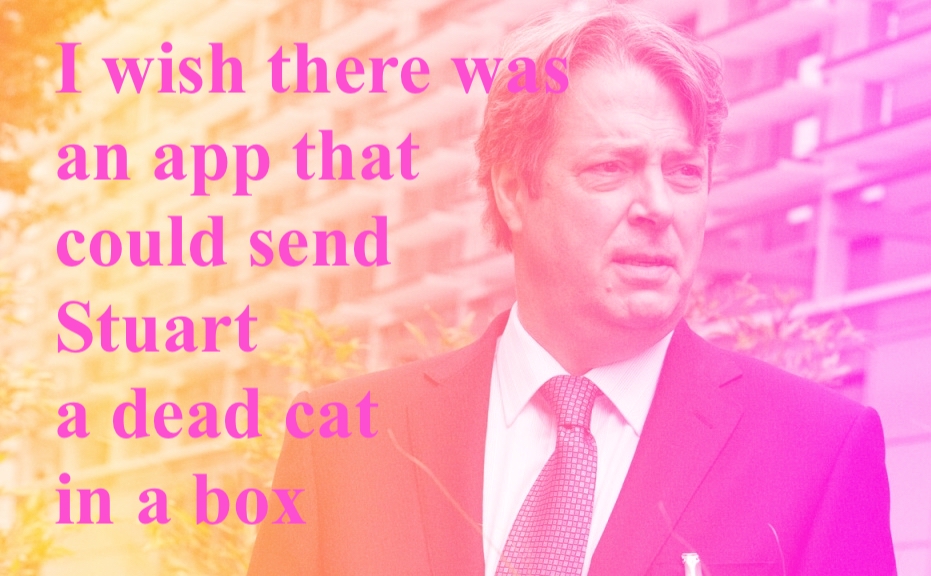

The whole thing smacks of the same energy as The Thick Of It which was once a fictional comedy, but is now more of a disturbingly accurate depiction of the lives of policy makers.

Peter Mannion from The Thick Of It coming up with a cool app idea

If those in charge actually knew how to harness the power of technology, we might be able to prevent drones from terrorising Gatwick, and awful, ill-thought-out propositions for internet regulation. The lack of nuance and the overuse of the word ‘troll’ in this white paper has made me feel very uncomfortable.

We’d also probably see less of these mindless, blanket ‘solutions’ like that of the Sri Lankan government after the church bombings earlier this year. They thought it was a good idea to just ‘turn off’ social media and other messaging services for fear of more violence occurring. My guess is that no one considered that if any groups wanted to continue the violence, they’d probably call each other on the phone or just use a VPN. Also, how do you tell your loved ones that you’ve been injured in a bombing if you can’t even use WhatsApp?

This abuse of technology is arguably worse; those in power seem to either have a blind trust or a blind fear of tech, with little or no nuanced understanding of what it can actually do, and therefore waste time misusing it or ignoring it altogether. It’s like forcing someone to churn out really basic Easter cards using InDesign. That is completely the wrong tool and no one needs Easter cards anyway 🙄

Put away your future-visions of an AI master-race for a sec…

…and remember that Scientologists exist. Did you know that the way in which Scientologists get rational humans to convert to Scientology, is by using a piece of ‘technology’? It’s called an E-meter and it’s covered with dials and buttons so it almost looks legit. Almost. In actuality it looks like this:

An E-meter; totally legit piece of kit

Yes it’s ugly and it looks like it can’t really do anything. That would be correct. The church of Scientology has been ordered by the federal courts to make clear that this thing ‘does nothing’. In previous times, Scientologists were claiming the E-meter could be used to perform certain kinds of therapy. It can’t.

Nowadays, if you actually want to be let on to the bridge to total freedom (I know) you have to buy an E-meter from the church itself. They actually suggest you buy TWO. In case one of them breaks, and you’re left completely meterless (which is such an embarrassing thought, I can’t even…). Interestingly, they cost about $5k. Why am I telling you all this? Well…

- Scientologists use these meters to ‘conduct audits’ of your emotional responses to questions (it’s a glorified lie detector, basically)

- If you want to be a Scientologist for real, you have to buy an E-meter, and those are only available to buy via the church of Scientology itself

- Scientology hides behind these bullshit contraptions to manipulate humans into thinking Scientology is not a scam, e.g: “we use these machines therefore we know what we are doing and you should just be a Scientologist because SCIENCE”

Something as stupid as one of these E-meters can be used to seem authoritative which leads to people trusting you. Just look at this Quora conversation; someone has given an answer to a question about E-meters, claiming the Scientology official website is a ‘reliable resource’. People think that this is a real thing, and that has a lot of power.

This type of technological abuse is extremely interesting because it helps us remember that it does not take all that much to convince a lot of people that your completely fake and basic-looking E-meter is worth $5000, and will give you passage over something called the bridge to total freedom. Humans are just FASCINATING.

While we’re in the realm of stupid tech, let’s take a minute to remember the stuff that isn’t trying to destroy your life. What about this belt that charges your phone, or this egg holder that tells you when you’re running low on eggs? It also teaches you Japanese, will act as the co-founder of your Fortune 500 company, and compliment you on your outfit every day. Just kidding: they’ve gone out of business.

Just as a final thought, technology can also be used to create things which exist above the harmful and useless; what about art? Hito Steyerl is a cool hip artist that people respect, and she used AI to create depictions of flowers that will exist in a post-human future. The show was called Power Plants (get it?) and was at the Serpentine earlier this year.

There’s also this piece which is currently at the Science Gallery, and it uses AI to translate the trending moods of political movements into colours visible on a wall-mounted metal plate. This conversion of something completely intangible into visual information is such a fascinating, unexpected use of AI. It’s safe to assume that whoever developed the AI did not expect that this would be a way in which it was used.

So technology is like… humans?

Yes: both good and bad. For the same reasons why you can’t cancel all humans, you can’t cancel all technology. This means that while technology allows for the production of wonderful, wholesome things, it also facilitates the dark, sucking, joyless realms of society in which people are unnecessarily surveilled, wrongly put in prison, or just plain scammed.

The ongoing abuse of technology is a desperately hard problem to solve — too few people know enough about tech, and most of those who do are the ones abusing it. How do you let humans appropriately harness the power that technology has to offer if this power is so easily subject to this level of abuse?